There can be no security without data protection

There can be no data protection without security

Of course neither is true. These kinds of click-baity absolutist positions are a pervasive internet blight designed to divert attention from critical detail to exploit and divide us…or…less dramatically (touch of fallout from Twitter timeline trauma there) they’re just distracting and singularly unhelpful.

Motivation to write this came from those kinds of fact-lite discussions about privacy, the General Data Protection Regulation (GDPR), and the part security plays in scoping work and then getting it done. I’ve watched spats, roasts, and much grinding of virtual teeth while folk I greatly respect try to correct myths and misconceptions, e.g. “95% of GDPR compliance is encryption”, “ISO27001 = job done”, “Your GDPR certified consultant took our GDPR certified course and can now GDPR certify you”, “No less than compliance will do”, and “Buy this blinky box or FINES”.

This is an ambitious (misguided?) attempt to unpick some of that with a little useful content. Now a post in multiple parts because it just got tooo long. First a look at:

- 1.1 The media backdrop

- 1.2 The Crux: a.k.a what the GDPR really says about security

- 1.3 Security as source of privacy risks: security as a legitimate interest, IP addresses as personal data, and profiling in a security context.

In subsequent installments, we’ll take a closer look at other misleading missives that are helping to perpetuate disagreements and disconnects.

1.1 Wading through security soup

While cybersecurity and the GDPR dominate a roughly comparable weight of more or less click-baity headlines (these are not links, but a quick browse will turn up plenty of examples)…

- Consent: why it’s all you need and why you can’t ask for it

- CPUs are melting

- 100% compliance or bust

- Ransomware: be afraid, be very afraid

- Our [insert shiny blinky thing here] can make you compliant

- Critical infrastructure is going critical

- GDPR fines will put you out of business

- The Internet of Things will end us

- Advertising is history

- AI: Run and scream, or bet the farm

- Privacy is dead, so meh

…the commercial world isn’t blind. Security is a marketing route into GDPR, and vice (enthusiastically) versa. That leaves your CXOs, lawyers, data protection teams, IT bodies, and security folk wondering what the heck to spend the budget on, because all of them are being targeted by very skilled influencers in different ways.

Confusion stems from security vendors and security experts misunderstanding the GDPR, not filtering out their security bias, or willingly leveraging GDPR furore to drive a security-centric agenda.

The result is often inadequate collaboration and implementation paralysis: different siloed parties developing piecemeal solutions, everyone waiting on everyone else for specialist inputs (and often reporting each other as blockages), while external influencers discreetly steer purseholders in TBC directions. All thanks to a lack of adequately skilled and influential bodies with the ability to straddle all groups and create a rational consensus: a plan fit for your local operational, strategic, and regulatory risk mitigation purposes.

1.2 The Crux

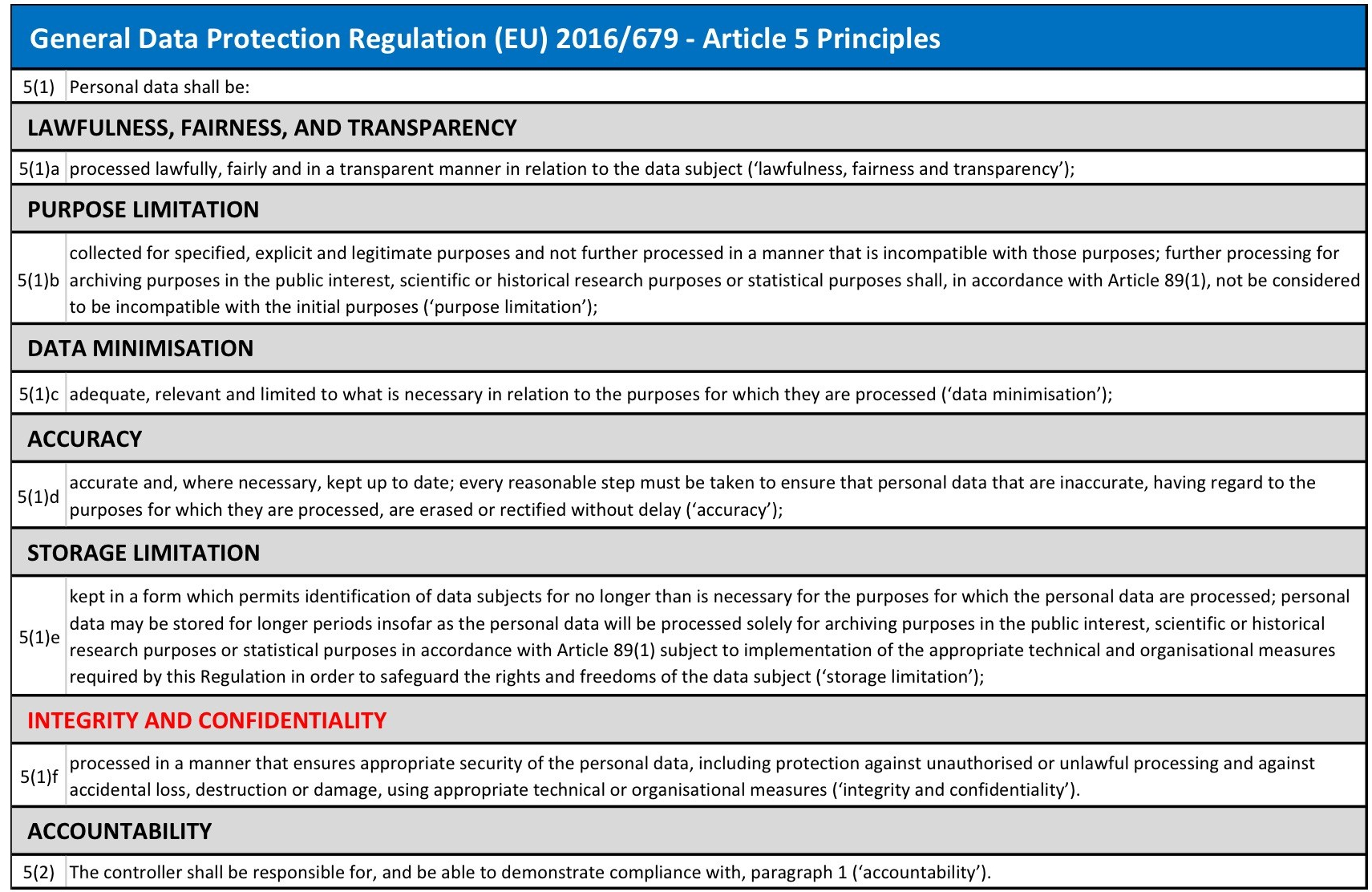

(as per GDPR Article 5: Principles relating to processing of personal data, and Article 32: Security of processing)

As privacy professionals are quick to point out, only ONE principle out of 8 in the UK’s 1998 Data Protection Act references security, and the same (on the face of it) is true for the GDPR. However, that is only part of the picture. It needs a closer look to see how this shakes down in organisational reality.

Security and Data Protection intersect where:

people, process, or technical controls* are required to minimise the risk of harm to data subjects resulting from a personal data breach

OR business as usual processing

AND

a security function’s own people, process, or technical controls involve processing personal data

PLUS

security input is needed to assess, oversee, and/or pay for GDPR related change

*not forgetting physical security controls (a common mistake made by many cybersecurity folk).

Below are pertinent GDPR extracts highlighting how responsibility is delegated to organisations to define control appropriateness and adequacy. In future there may be some help from codes of conduct and certifying bodies when (if?) they appear, but don’t hold your breath for anything detailed.

Inherent in that is a requirement to effectively assess risk to both the organisation and potentially impacted data subjects, then document that assessment. If you can’t or won’t do that, you can neither identify and prioritise required control, nor defend pragmatic limitations on the type and extent of controls you implement.

No specific security control type is mandated by the GDPR…really…not even encryption.

Security controls ARE required to minimise the specific local risks of harmful personal data compromise for a given organisation and that organisation’s third parties

No detailed standard of information security control is mandated by the GDPR…yet.

It’s also always worth reading the recital that sits behind each article. In this case Recital 83:

Aiming to be thorough, here’s a PDF document I created, including all of the other mentions of ‘security’ in the GDPR (excepting references to national or public security). None of which contradict the crux of the relationship stated above.

If I had to draw out one fact from everything above that needs to be drilled into the heads of many security practitioners (including me in the early days), it’s this:

Data Protection is NOT just about minimising the probability and impact of breaches

Data Protection IS about minimising the risk of unfair impact on data subjects resulting from historical data processing, processing done today, and processing you and your third parties might do in future.

The Data Protection Bill, specifying how the GDPR becomes part of UK law, reflects all of the above requirements and definitions relating to security. There is no significant variation in either interpretation or current legal derogation; however, that may change as it proceeds through the final steps to become law.

1.3 Security as source of privacy risks

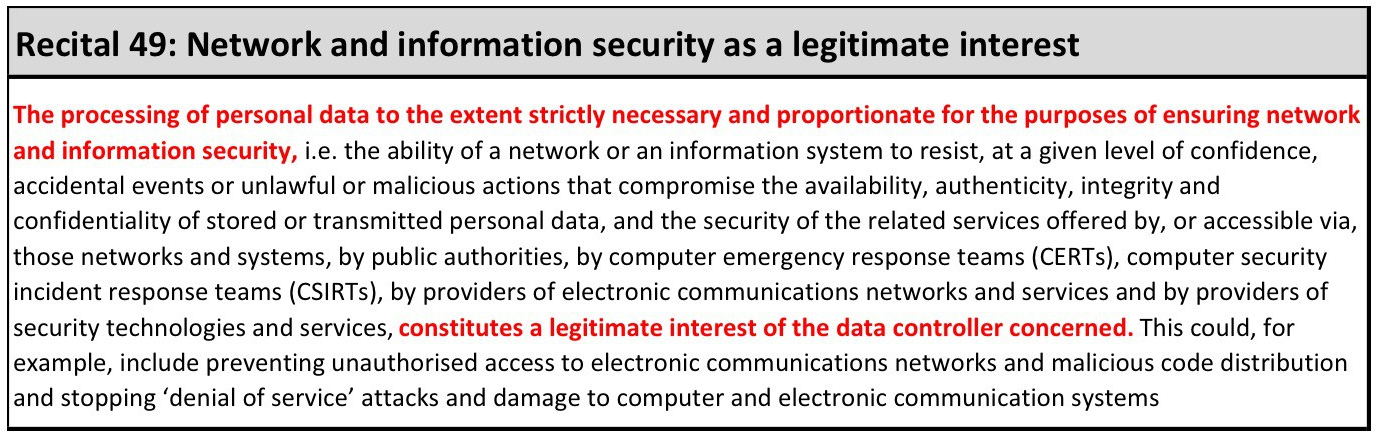

What people must not forget are risks to an individual’s rights and freedoms that can be caused BY information security. This is a less frequent debate, but a lively one, because many people are still unaware that security can be classed as a legitimate organisational interest…

…IF related data processing is sufficiently limited, fair, transparent, and secure, it doesn’t involve automated decision-making, AND it doesn’t involve special data classes.

GDPR Article 6(1)(f) – Link to full Article 6 text

For those not familiar with the concept of legitimate interest, it is one of the 6 legal grounds in Article 6 for processing personal data. The others include (but are not limited to) consent, fulfilling a contract, and fulfilling other legal obligations. Organisations need to choose the most appropriate one for each specific data collection purpose and processing use case.

The headline implication for most security leaders will therefore probably be:

If you meet requirements to claim and defend a legitimate interest in processing personal data for security purposes, you don’t need to obtain consent from data subjects (more on that, including inevitable caveats, below).

If data handled includes data about children you can still rely on legitimate interest, but you have to assess and document diligent consideration of specific related risks. On 21st December 2017 the ICO began consultation on local guidelines for processing data about children under the GDPR. This is the draft and consultation is open until 28th February.

Security as a legitimate interest is still under debate for the public sector as they have been denied use of legitimate interest as a legal basis to carry out their core duties, but security can reasonably be argued to fall outside that direct public service remit.

There is also an untested argument that private and public sector companies could rely on other legal, contractual, or public interest bases for security related processing.

For example, the NIS Directive (The Directive on security of network and information systems) mandates cybersecurity standards for European member states, but scope is limited to critical national infrastructure and other organisations that members deem to be operators of essential services e.g. cloud computing services and online marketplaces.

In addition, almost all cybersecurity requirements in contracts and laws are relatively high level. No list of controls or referenced standards can hope to reflect the myriad of threat environments, technologies, operating models, and sizes of organisations. Even when there are granular requirements, their validity doesn’t survive the rapid rate of technological and threat change. All of which limits the usefulness of contracts and other applicable laws as a legal basis for security related personal data processing (thank you to Neil Brown, MD of Decode Legal and telecoms, tech & internet lawyer, for highlighting those points).

Member states are free to add other local exceptions for processing, but that, right now, is the situation.

IP addresses as personal data

Since IP addresses started to be viewed as personal data, IT and security teams have become MUCH more interested in data protection. IPs are not defined as a type of personal data in isolation, but an IP address can help to single an individual out.

We just can’t assume that “technical intelligence” such as IP address resolution is infallible.

From Naked Security post ‘IP addresses lead to wrongful arrests’, by Lisa Vaas, 2nd January 2018

Yes, folk with moderate tech awareness and a low privacy risk threshold might run Virtual Private Networks (VPNs) and browse via Tor, but by association with other data points (e.g. browsing metadata, device info, content geolocation) one could drill down to a postcode, premises, or small group of users and harvest aggregate data that enables harm to the rights and freedoms of individuals. Here’s out-law.com with a far meatier legal perspective on IPs as personal data.

To work through a processing example and make this clearer: say your security team engages a 3rd party to provide a website plugin and service that allows you to positively identify and block devices posing known or likely risks.

Device fingerprinting and profiling for security purposes

Device fingerprinting works by capturing data about devices and browsing sessions to create a unique identifier that allows the device to be tracked across sites and linked to historical activity. It will typically include details like IP address, browser type/configuration, operating system version, and sometimes much, much more (depending on client-side control and how it’s done e.g. some fingerprinting involves proactive interrogation of the connecting device to acquire substantial extra detail). This is independent of cookies which you should now be familiar with.
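As a rough illustration of that mechanism, the sketch below derives an identifier by hashing a handful of device attributes. The attribute names, the `device_fingerprint` helper, and the choice of SHA-256 are all assumptions for illustration; real products collect many more signals and use their own matching logic.

```python
import hashlib
import json


def device_fingerprint(attributes: dict) -> str:
    """Return a stable hex identifier for a set of device attributes.

    Hypothetical helper, not any vendor's actual method.
    """
    # Sort keys so the same attributes always serialise identically.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


session_a = {
    "ip": "203.0.113.7",  # documentation-range address, not a real host
    "browser": "Firefox 58.0",
    "os": "Windows 10",
}
# Same data supplied in a different key order.
session_b = dict(reversed(list(session_a.items())))

# Identical attributes yield an identical identifier on every visit.
print(device_fingerprint(session_a) == device_fingerprint(session_b))
```

The identifier is built from the IP address and other device details, and its whole purpose is to single the same device out again later, which is why the data protection questions in this section still apply to it.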

The GDPR Definition of Profiling

“Any form of automated processing of personal data consisting of the use of personal data to evaluate certain personal aspects relating to a natural person, in particular to analyse or predict aspects concerning that natural person’s performance at work, economic situation, health, personal preferences, interests, reliability, behaviour, location or movements.”

Device fingerprints are compiled and algorithmically judged against a bunch of pre-defined or bespoke criteria. The result is used to determine whether a device is ‘bad’ then alert you so it can be investigated. At the same time the tool may refer to a database of known ‘bad’ devices previously identified by the vendor and the vendor’s other clients.
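A minimal sketch of that evaluation step might look like the following. The rule names, thresholds, session fields, and the plain set standing in for a vendor's shared list are all invented for illustration. The function returns the criteria that matched rather than a bare verdict, so whoever investigates the alert can see why it fired.

```python
from typing import Callable, Dict, List, Set

# Hypothetical criteria: each maps a rule name to a check on session data.
RULES: Dict[str, Callable[[dict], bool]] = {
    "many_failed_logins": lambda s: s.get("failed_logins", 0) >= 5,
    "headless_browser": lambda s: s.get("headless", False),
}


def evaluate_device(fingerprint: str, session: dict,
                    shared_bad_list: Set[str]) -> List[str]:
    """Return the name of every criterion this device matched."""
    matched = [name for name, check in RULES.items() if check(session)]
    # Separate lookup against the (assumed) vendor-maintained list.
    if fingerprint in shared_bad_list:
        matched.append("on_shared_bad_list")
    return matched


bad_list = {"abc123"}  # stand-in for a vendor's shared bad device list
hits = evaluate_device("abc123", {"failed_logins": 7}, bad_list)
print(hits)  # ['many_failed_logins', 'on_shared_bad_list']
```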

Here’s The Register looking at device fingerprinting in the context of advertiser related tracking. They confirm that cookie rules requiring user consent (part of the Privacy and Electronic Communication Regulations – essential additional reading because it interacts with many GDPR requirements) apply to device fingerprinting too, but they also reference this security related exception.

Special data classes and automated decision-making

If you are automatically blocking devices, you need to bear in mind that automated decisions with no human interaction (using algorithms or more basic fixed rules), are a GDPR red flag.

If those decisions could cause legal or other similarly significant effects for data subjects (that is the GDPR wording for unacceptable fallout; the links at the end of this section give tips on how to judge), or special classes of data are involved, processing is prohibited by default (see Article 9 for limitations on legitimate interest and other conditions for processing special data classes).

It therefore requires explicit consent, or another justifiable legal basis (see the full list of Article 6 legal grounds for processing shown earlier). That’s all enshrined in GDPR Article 22 as a key data subject right.

Profiling is only as unbiased and benign as the people who define requirements, collect data, design algorithms, share outputs, and then act on results.

Profiling more generally also comes under scrutiny. The risks associated with bulk algorithmically enabled processing and automated decision-making, especially in the contexts of surveillance and social media, were fundamental drivers for creation of the regulation.

To put those risks and some undoubted information age benefits in better context I would recommend everyone reads this briefing paper presented to the European Parliament by Caspar Bowden. Although written in 2013, the sociological, political, commercial, technical, and ethical points are more than current enough to highlight the challenges and the price of apathy.

The GDPR requires you to retain ability to unpick the algorithmic reasoning, because data subjects have a right to request human intervention in decisions, and to understand how and why decisions are made, to the extent it impacts them. That, with the explosion of innovation in this space, and shortage of people capable of translation, will be an enduring challenge. One I’ve previously written about at length.
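One way to keep that ability, sketched here under stated assumptions, is to record each automated decision together with the rules that triggered it, and to hold anything that would block a device until a person has confirmed it. The `DecisionRecord` class and its field names are hypothetical, not taken from the GDPR or any guidance.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class DecisionRecord:
    device_id: str
    matched_rules: List[str]            # why the algorithm flagged the device
    proposed_action: str                # e.g. "allow" or "block"
    reviewed_by: Optional[str] = None   # set once a human confirms
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def needs_human_review(self) -> bool:
        # Assumption for this sketch: no block takes effect on the
        # algorithm's output alone.
        return self.proposed_action == "block" and self.reviewed_by is None

    def to_json(self) -> str:
        # Serialised form that could be kept to answer a later query.
        return json.dumps(asdict(self), sort_keys=True)


record = DecisionRecord("abc123", ["on_shared_bad_list"], "block")
print(record.needs_human_review())  # True
record.reviewed_by = "analyst-1"
print(record.needs_human_review())  # False
```

A stored record like this only explains a decision if the rule names themselves mean something to the person asking, which is the translation problem described above.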

Data subjects can still object, and you are still required to protect

Also, although legitimate security and fraud protection interests might negate the need for consent if you can effectively evidence there isn’t potential for significant harm, that doesn’t remove a data subject’s right to object, or the need to implement adequate security controls. If you receive a complaint about profiling, or there’s a related breach, those controls and justifications will really be put through their paces.

Check out the Article 29 Working Party (WP29) guidance on Data Protection Impact Assessments (DPIAs) featuring profiling as a trigger for assessment, and more specific ICO, WP29, and other related guidance below:

- Article 29 Working Party – Guidelines on Automated individual decision-making and Profiling for the purposes of Regulation 2016/679, wp251

- ICO – Rights related to automated decision-making including profiling

- Bird & Bird News Centre – Article 29 Working Party Guidelines on Automated Decision-Making and Profiling

- Field Fisher Privacy Law Blog – Let’s sort out this profiling and consent debate once and for all

Do your due diligence

As a data controller, a DPIA and more general due diligence should be carried out to confirm the data protection and related data security controls in place at the vendor organisation, and the extent of data captured and accessed by the vendor, the vendor’s suppliers, and other vendor clients.

The WP29 guidance on DPIAs gives a pretty good list of things to cover, including impact of automatic algorithmically triggered blocking and human approved blacklisting. e.g.:

- Is it REALLY necessary? Here’s the UK ICO’s updated guidance on legitimate interest as a legal basis for processing. Quoting from the same:

‘Necessary’ means that the processing must be a targeted and proportionate way of achieving your purpose. You cannot rely on legitimate interests if there is another reasonable and less intrusive way to achieve the same result.

- Are you using data to make automatic decisions about data subjects? If yes, you are prohibited by default from processing special data classes for this purpose.

- Will it involve data about children? If yes, what are the potential risks? How can you mitigate significant risk, or reliably remove or redact that data?

- If it does involve special data classes, can you get consent to process that subset of data?

- If you can’t obtain consent, can you remove or reliably redact that data? If you can’t, can you identify another legal basis for processing? If you can’t, you need to stop processing the data for this purpose.

- If you can obtain consent, can you cease processing an individual’s special data if consent is withdrawn? If you can’t reliably remove or redact that data subset, consent is pointless, so it is not a fair and appropriate legal basis.
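The special data questions in the list above chain together, so it can help to see them as a single decision flow. The sketch below is one simplified reading of that chain, not a substitute for the assessment itself. The function name, parameter names, and returned wording are invented, and treating consent you can’t honour on withdrawal the same as consent you can’t obtain is an assumption of this sketch.

```python
def special_data_next_step(involves_special_data: bool,
                           can_obtain_consent: bool,
                           can_stop_on_withdrawal: bool,
                           can_remove_or_redact: bool,
                           has_other_legal_basis: bool) -> str:
    """Walk the checklist questions in order and return the next action."""
    if not involves_special_data:
        return "no special data: continue the wider assessment"
    # Consent only counts if withdrawal can actually be honoured.
    if can_obtain_consent and can_stop_on_withdrawal:
        return "rely on consent for the special data subset"
    if can_remove_or_redact:
        return "remove or redact the special data"
    if has_other_legal_basis:
        return "document the other legal basis"
    return "stop processing this data for this purpose"


# Consent is obtainable but withdrawal can't be honoured, and no fallback exists.
print(special_data_next_step(True, True, False, False, False))
# stop processing this data for this purpose
```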

So again, is processing really necessary, and what is the risk?

- How does blocking a device limit future data subject interactions with your organisation and your supplier or partner organisations?

- If you label their device as ‘bad’, are they added to the vendor’s shared bad device list?

- How might that impact their ability to access other online services in future?

- Will the data be shared with other internal departments or 3rd parties for different processing purposes?

- Is any related fallout for data subjects likely to have legal ramifications, or other equally significant impacts?

- Does potential harm that could be caused outweigh the benefit of blocking them e.g. protecting other online service users and associated systems?

You can’t avoid this work. Processing personal data for most security purposes will be a justifiable legitimate interest, but you can’t just wing it with any and all data you can get your hands on, and you have to show your workings out in advance. That MUST include evidence that your organisational interests, or benefits to data subjects, are not outweighed by potential negative impact on individuals, or subsets of data subjects. Why? Because you won’t get away with a hastily scribbled justification when something (as it inevitably will) goes wrong.

When you’ve picked the legal bits out of those pros and cons, the quickest way to informally benchmark compliance is always:

The GDPR Sniff Test

All of the data protection and privacy pros of my acquaintance (including those who work for the ICO) circle back to reasonableness when assessing a justification for personal data processing and associated risks. For example:

You know what the privacy notice says vs the data handling reality

If it was your data, would the privacy notice feel easy to understand and transparent about this data use?

If it was your data, would this data collection, processing, and sharing feel fair and proportionate relative to the declared purpose?

You know the data security risks and control reality

If it was your data, would the security controls in place feel appropriate, and adequate to mitigate unacceptable risk to you, your family, and your contacts?

You know the justification provided for processing and you know how that might impact data subjects

If it was your data, would the stated organisational interests feel like they outweigh all of the things that could go personally wrong?

As with all else, in the end, it comes down to managing risks, only this time, on behalf of data subjects.

Then, moving forwards, it’s about infiltrating the bright ideas factories to hard-wire consideration of required data protection and security control. That, at base, is the whole ballgame: (PSbD)²… a.k.a. privacy and security by default and design. Something we can influence organisational culture to respect, but it’s going to take huge amounts of effort and time. Lots and lots and lots of time.

2. More myths and misconceptions (coming soon)

- Encryption is essential and/or negates the need to apply other controls

- ISO27001 certification = GDPR compliance

- Existing security related risk management is fit for privacy purposes

- Pseudonymisation or Anonymisation negates the need for compliance with GDPR requirements

- A personal data breach will result in GDPR related fines

- Privacy is dead, so why bother?

Apologies to regular readers for the time it took to publish this. Content was kindly reviewed by a legal expert with specific focus on technology and privacy law. However, nothing here should be viewed as legal advice. Please refer to your own advisors before basing any decisions on content.