There is a critical gap for most firms: An inability to interpret and leverage gap analysis, data discovery, and mapping output to actually implement technical data processing change.

This article is about the challenges most large firms are facing when trying to shape a rational, achievable, and sustainable response to some parts of the GDPR. For example:

- Restricting processing for specific data subjects, data classes, and other subsets of data,

- Minimising data collected, data processing, and access to data,

- Actioning a data subject’s right to be forgotten,

- Actioning a data subject’s request to opt-out of marketing and ensuring that decision is recorded and tracked,

- Reducing the risk caused by widespread over-retention of personal data,

and

- Honouring the 72 hour breach notification requirement with accurate enough information about data compromised to avoid additional risk to data subjects, loss of credibility, and severe reputation damage (see the recent Equifax breach for details, including the decision by the IRS today to suspend their contract with the firm).

Challenges centre around system limitations. Gaps in ability to analyse, segregate, and granularly control processing of subsets of data across an organisation. Common enough gaps to cast doubt over ability of some to begin to bring data processing into line with GDPR requirements by the May 25th 2018 enforcement date.

Those who read this blog are very familiar with my attitude to vendor FUD and shamefully creative compliance-nailing claims. I underlined that recently with my opinion on the role of automated data discovery in a GDPR programme. That’s why I want to get this out of the way first in the interests of transparency:

I’m now spending some of my time working with a tool vendor (more on that at the bottom of the post if you are interested). I’m doing so because of the experience I’ve had trying to granularly scope, plan, and implement data processing systems change in big organisations. Let me explain:

Sometime after the beginning:

As you all know there are a plethora of existing data governance and security tools and a horde of newly emerged (or rebranded) competitors. As part of working to scope change for large GDPR programmes, much time is spent picking the bits out of the highest level objectives to drill, level by level, to something vaguely resembling a functional or non-functional requirement. All to ensure tools will actually do what you need them to. Preparation of tooling requirements, where it’s necessary, usually cuts across the following areas:

- Data discovery, categorisation, & mapping

- Data Protection Impact Assessment (DPIA), workflow, & content management

- Subject request management, workflow, & oversight

- Information asset inventory solutions

- Data collection, consent, & policy management

- Encryption, anonymisation, & pseudonymisation

A colleague misspoke the name of one vendor contender – he called it Tetris – and that’s exactly how this entire process can feel.

123rf.com stock image: Copyright : Mateusz Król

I was a bit of a block wrangling demon in my day, but in this GDPR tooling tango, I was never going to get close to the Russian rocket launch, dancers, and band (showing my age in no uncertain terms). All have more or less functional crossover, but worthwhile ones never quite tessellate into a coherent whole: It’s all about finding and sorting data rather than controlling what’s done with it, or the whole needs so many, you can be at risk of implementing sometime next decade with no budget for folk to use them.

That’s when it struck me: There’s a critical gap for most firms. An inability to interpret and leverage gap analysis, data discovery, and mapping output to actually implement technical change. Data processing system search, analysis, monitoring, and granular data management capability just isn’t there – not down at a level that understands and interacts with the actual data – and where potential exists to create it, the wholesale development required to make it happen often doesn’t make a blind bit of strategic and commercial sense…at least not in the time left until the May 25th 2018 enforcement date.

In the meantime:

This is by no means the only focus for most. Depending on existing data protection maturity, the top down data RACI, governance forums, steering groups, and initial plans need to get sorted out. Work should be in progress to update all of the main privacy notices and key third party contracts. Policy drafting should have begun. A new DPIA template needs to be produced and integrated with other change assurance processes (work by security, IT and other change stakeholders often has lots of duplication to iron out).

Training has to happen. Primary data collection points should have been identified. Frontline business areas are likely working with the DPO and lawyers to review processing purposes, uses, and associated legal grounds for them. DPIAs on the highest risk processing should be taking shape. Work to pin down inventory requirements with a useful data taxonomy should be progressing.

At the same time folk need to begin the painful job of deciding the fate of the existing marketing database. Trying, in the face of on-going uncertainty, to design a below the line marketing world around explicit consent, with potential for a little legitimate interest and soft opt-in. At the same time standing up plans for related interface, telephony IVR system, script, and process changes.

But, when it comes to data minimisation, data over-retention, bolstering incident response capability, ensuring subject request management capability (especially servicing that right to be forgotten), dealing with ongoing consent and opt-out management, improving encryption, pseudonymisation, and anonymisation capability, and creating a sustainable view of data flows – it gets tough.

And this is the kicker:

For any business without universal data analytics and control capability – people and tools that successfully aggregate, clean, sort, structure, and allow centralised granular analysis and management of all data souping around the business (be honest, who is really there yet?) – there is a gap. A gap that typically requires an enormous depth of specialist local knowledge, time, and money to try and close. Just reviewing the ability of various systems to delete individual or multiple records can take months (assuming your system administrators and others involved have stuff other than GDPR to work on). Not to mention the implications of that for linked data stores and associated business processes.

As for ability to find, pseudonymise, and/or restrict use of a subset of data in an individual record (e.g. special data classes, or convictions data)…that’s just not something most systems are geared up to do.

Multiply that assessment by the number of key data processing systems across your estate, then multiply it again by the usual lead time to plan and stand up a change project (where it’s even within your power to do so, rather than down to a system vendor, cloud provider, or an IT outsource partner)…and you start to grasp the problem.

For businesses with an existing or developing analytics capability, data scientists aren’t automatically tooled up technically, data-structurally, or practically to do the GDPR data filtering and control job: All in scope datasets need to be identified and loaded, business logic has to be thrashed out and translated into code, datasets have to be cleaned, existing analytics capability might not reliably identify individual records or data categories, and money may very well be a problem. Even if there are adequate funds, requirements, technical capabilities and bodies not fully allocated to other big data work, often just the time to produce a detailed plan and scope will rule out change before the GDPR enforcement date.

Then you’ve got to ask how quickly and how often business logic and reports can be tweaked when GDPR requirements inevitably evolve after expected clarifications and future test cases.

So this is often the planning reality:

Over there the DPO and legal team decide that all convictions data has to get identified and we have to have capability to segregate it from other data if GDPR rules stay the same.

Over here most systems administrators haven’t got a Scooby Doo which records or fields include convictions data and those who can find it don’t have a way to strip out those fields or inject specific alternative control.

Over here the DP team and lawyers decide that all special classes of personal data need to be encrypted at rest and decrypted access needs to be granularly controlled.

Over here the security and IT architects aren’t even close to making whole database encryption work sustainably for most of the estate, and they are quick to point out that all the data gets decrypted for anyone with a valid set of credentials…either their own, or the ones they stole to hack the business. And selective decryption for authorised users…uh…nope.

Over here the marketing team are trying to segregate customers who have given compliant consent, customers whose consent might be compliant, and customers who definitely should not be marketed to unless the GDPR rules evolve.

Over here, the marketing database admins have no way to track historical consent status (especially when trying to allow for new granular breakdown between marketing mediums and purposes) and they’re having apoplexy trying to kludge a way to find and segregate what’s ‘safe’ to use, plus a subset of customers where it might or might not be possible to reconfirm consent (a delicate tightrope as evidenced by Flybe and Honda’s clash with the ICO). Suddenly the scorched earth Wetherspoons option is back on the table, together with a huge estimate of lost marketing revenue while marketing data coffers are re-filled.

Over here the DPO and legal team say it’s time to finally sort the over-retention problem and respect folks’ right to be forgotten. Time to delete, or at the very least, anonymise personal data where there’s no legal reason to keep it.

Over here the sysadmins say: “Super. When will it be convenient to corrupt your CRM database?”

or

“Anonymise? No problem…if you don’t mind mangling all the up and downstream database tables.”

and

“We have HOW LONG to understand all potential technical gotchas and system interoperability implications!?”

plus

“Can you tell us all the potential exceptions? Last time we tried this it took 5 months to gather info about valid exceptions, then, at the 11th hour, it got blocked by a director because his team discovered that a piece of strategic change might have a vital dependency on old data”

Over here the DPO and legal team say that data subject requests have to be handled more efficiently (just 30 days for an initial response and – unless you have a great excuse – all for free) so finding data and restricting / blocking use has to get better.

Over here the IT crew say: “Fantastic. How exactly would you like me to implement blanket search, retrieval, and database change capability across our big, complex, distributed, and legacy-riddled estate?”

Over here the DPO and legal team say that we have to notify a breach to the ICO within 72 hours of discovery. And, to minimise harm to data subjects, reputation damage, and risk of fines or sanctions, we have to quickly understand which records were compromised (see the PR fun for OPM, TalkTalk, Yahoo, and Equifax for details).

Over here the security and IT bods say “Well that depends on accuracy of your data inventory and data flow maps doesn’t it…and how good the SOC, system admins, and business system contacts are at trawling through SIEM events and transaction logs. Allowing for the fact they still won’t have much of a clue what portion of accessible data was actually disclosed or stolen”

A better way?

That’s about the time I became aware of SecuPi. When I first talked to Alon Rosenthal, SecuPi CEO, I was both excited and hugely sceptical. There was surely no way his solution could do everything he said it did. So I put my rabid auditor hat on and started to dig.

Lo and behold it’s not a panacea – nothing is – but what it can do impressed me sufficiently to make me want to collaborate. That and the fact there’s nothing in the market able to do the same thing right now.

To illustrate:

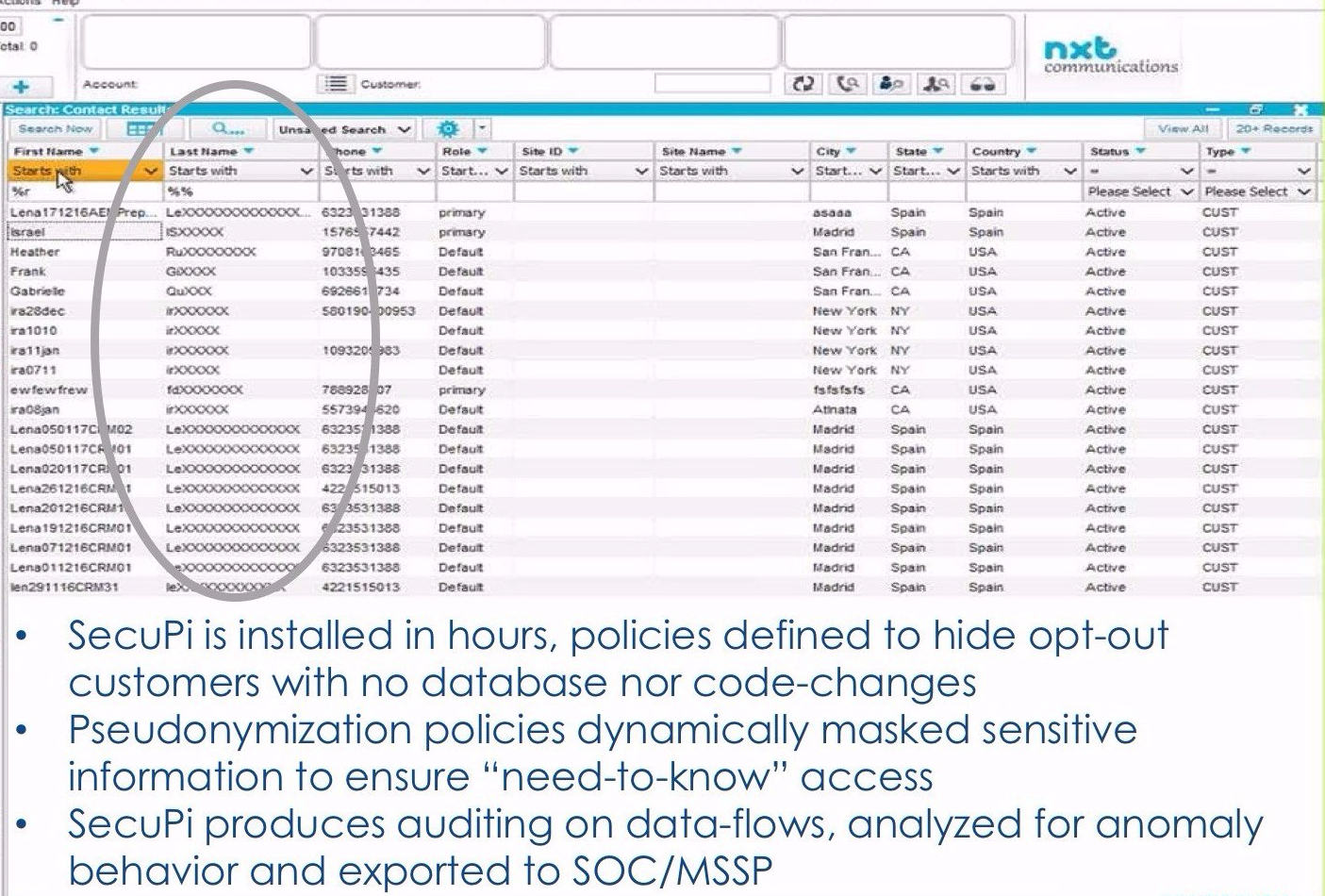

SecuPi uses a lightweight agent installed on servers. It sees what a user requests and then interacts with the request and response to control the data the user does or doesn’t see and how they see it. No database config or code changes required.

In the above example a rule has been set that makes the end user interface, or the admin interface, return partially redacted data for John Smith. It can also be configured so a portion of users don’t see those fields at all, or a subset of users get a null return for the whole record…as if John Smith doesn’t exist in the database.
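For readers who think better in code, the three outcomes just described (partial redaction, hidden fields, and a null return for the whole record) can be sketched in a few lines of Python. This is purely illustrative and is not SecuPi’s implementation or API: the function names `mask_value` and `apply_rule`, the three mode names, and the sample record are all hypothetical, invented for this example.

```python
from typing import Optional

# Hypothetical rule modes, invented for this sketch (not SecuPi configuration).
REDACT, HIDE_FIELDS, HIDE_RECORD = "redact", "hide_fields", "hide_record"


def mask_value(value: str, visible: int = 2) -> str:
    """Keep the first `visible` characters and replace the rest with '*'."""
    return value[:visible] + "*" * max(len(value) - visible, 0)


def apply_rule(record: dict, mode: str, fields: set) -> Optional[dict]:
    """Return the view of `record` a user should get under the given mode."""
    if mode == HIDE_RECORD:
        return None  # as if the data subject is not in the database
    if mode == HIDE_FIELDS:
        return {k: v for k, v in record.items() if k not in fields}
    if mode == REDACT:
        return {k: mask_value(str(v)) if k in fields else v
                for k, v in record.items()}
    return dict(record)  # unknown mode: return an unmodified copy


# Made-up sample data; the stored record itself is never altered.
record = {"name": "John Smith", "email": "john@example.com", "city": "Leeds"}
sensitive = {"name", "email"}

redacted = apply_rule(record, REDACT, sensitive)
fields_hidden = apply_rule(record, HIDE_FIELDS, sensitive)
record_hidden = apply_rule(record, HIDE_RECORD, sensitive)
```

The point of the sketch is that every outcome is a different view of the same untouched record, which is why this kind of control can be changed or reversed without touching the database.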

Something one Supervisory Authority suggested might offer a pragmatic alternative to deleting the underlying data a.k.a virtual fulfilment of a data subject’s right to be forgotten…after all, if the only person who can see or use data is the DPO, what is the risk of ongoing harm to the rights and freedoms of natural persons?

Alternatively SecuPi can interact with databases to delete data, or flag specific content for a system administrator to action later.

Data aggregation, monetization, and digital integration is driving business growth, but you don’t have real-time visibility and control. This is the critical and growing data protection gap that SecuPi helps to fill.

You can trigger control based on user ID, field name, field value, user group, defined data category, anomalous activity alert (see below for more on that), or any rule based on any number of other user, data, category, risk, request or database variables.

It can also deal with unstructured data fields. For example, where your sales team might have noted damaging personal info to help them better manage personal relationships with valuable customers. Those fields can be blocked or redacted for any user who doesn’t need to see them, and access to them will be granularly logged. Allowing you to monitor and review activity, right down to the specific field and field contents.

And that works for all application layer interactions, plus a number of other user / system and system / system interactions Alon can tell you about if you’d like to know more.

Playing well with others

By choice, interactions with data can be encrypted using SecuPi in-built encryption. But what about data at rest? If you want to encrypt that giant data lake being wrangled by your data analysts, you have few options for granular sustainable control. By partnering whole database encryption with SecuPi, data usually decrypted for all valid users by default, can be selectively decrypted, redacted, or hidden.

Or…if you’ve done your feasibility study and whole database encryption is just too processor hungry, complex, and pricey, SecuPi’s ability to restrict and forensically log data interaction provides great compensatory control.

Image Credit: https://www.123rf.com/profile_johan2011 johan2011

SecuPi also dramatically enhances data discovery, categorisation and mapping capability, by providing granular detail about data contained in systems, data used, data moved, users, and patterns of historical and current usage (also a great resource to help refine the to-do list for housekeeping data already hanging around in offline files and emails).

More generally, consider this: When you’ve tidied up offline data, done a best efforts sweep with algorithms more or less successfully guessing what might be personal, how will you prevent it from happening again? (I’ve seen too many one-off efforts do a fantastic job, but fail to sort root cause, so 6 months later you’re back to square one). That’s where SecuPi excels.

How many damaging breaches DO NOT involve data extracted in some way from a structured data store? That system extract stored to the open file share because it’s too big for email. That report done monthly (whether it’s still needed or not) and emailed to a shared mailbox. That dataset sent to a 3rd party so they can do the proof of concept for the data analytics solution. That data (that should be pseudonymised), to update the test environment. The socially engineered PA extracting data to send to the spearphisher. The hacker using compromised credentials to grab whatever they can. The disgruntled leaver scooping customer data to take to a competitor.

SecuPi would see and interact with all of that reporting and extraction activity, applying redaction, blocking, and other control as desired. Control that’s persistently reflected in the extracted data whether the file ends up in an email, on a server, on a laptop, on a thumb drive, or abandoned on a printer.

SecuPi can also interact with the extraction process to add attributes to common file types. Attributes signalling classification and content to help other Document Rights Management or Data Loss Prevention tools control access and data movement.

The only place it’s harder to get equivalent control is the command line. SecuPi will see requests if you install a SecuPi agent on the user’s machine, or integrate control with solutions aimed specifically at god-like access (allowing for the fact all access is covered by SecuPi if it’s via an application layer admin interface, and lots of new functionality is in the pipeline).

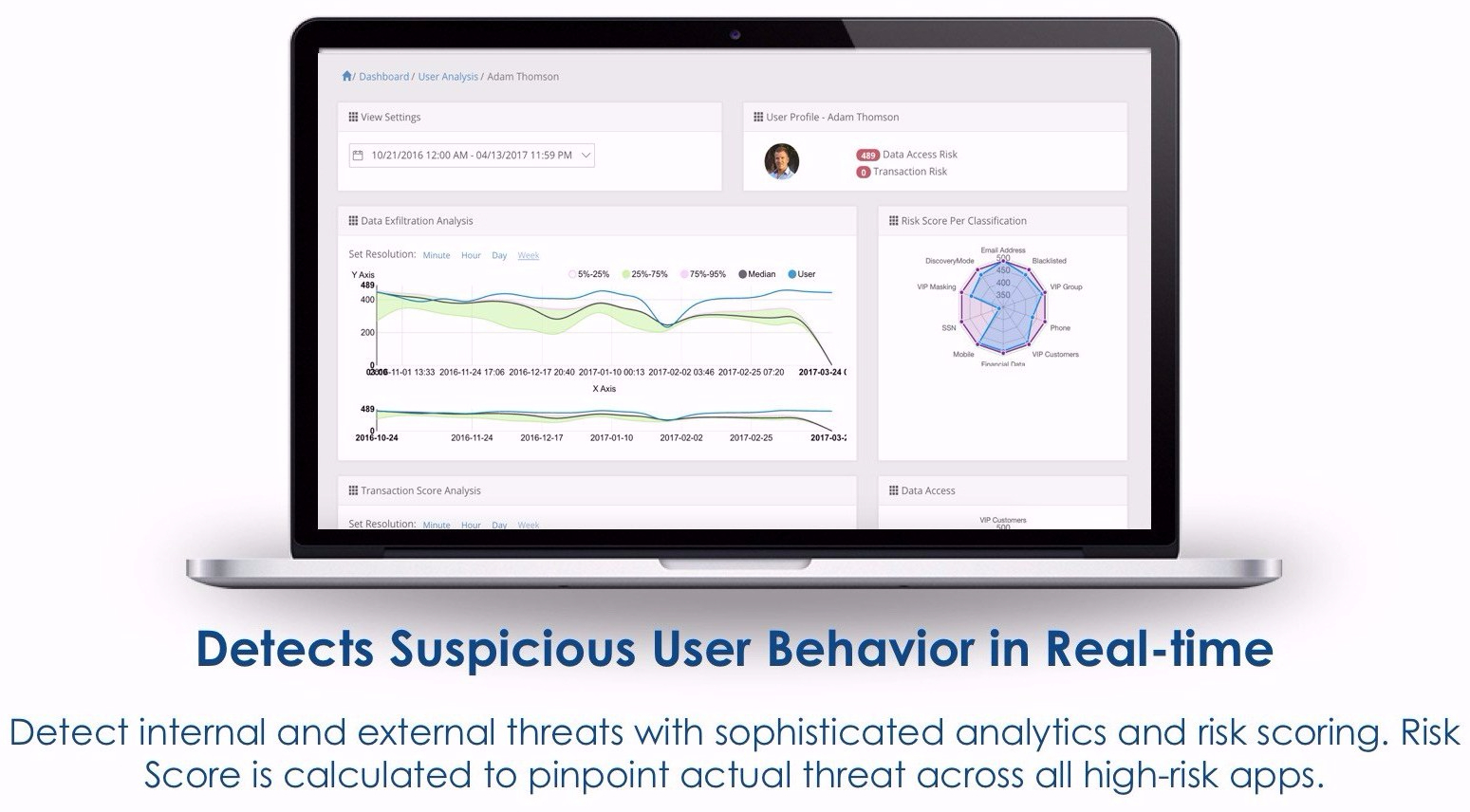

Probably more to the point SecuPi can log activity, then, if usage looks suspicious, apply immediate control. All outputs are easy to integrate with other data monitored by your SOC or security team and allow them to drill down to details of data accessed.

Are you sure they gave consent?

There are other ways that SecuPi can cleverly supplement other control capability and they work with a number of solution providers who provide niche value-add across the data estate.

For instance, SecuPi can centrally block or limit use of data belonging to customers who don’t consent, opt-out, or object to specific data uses (and giving customers a granular choice is a GDPR requirement), but historically firms have not done a great job tracking consent status through the whole lifecycle of customer relationships (one of the things contributing to the marketing migraine, because you have to be able to prove appropriate consent was given).

SecuPi uses information on consent to trigger redaction, access blocks, or other desired controls wherever necessary. That can be supplemented by a smart solution that tokenises and centrally stores timestamped records of consent and consent updates at any required level of granularity (e.g. one answer for direct email, and another for telephone marketing, or agreement to marketing for products supplied by one partner firm, but not another).
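To show what timestamped, granular consent records might look like in practice, here is a minimal Python sketch. It is an assumption-laden illustration, not the design of SecuPi or of any partner product: the `ConsentEvent` and `ConsentLedger` names, the channel and purpose labels, and the rule that the most recent event wins are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class ConsentEvent:
    subject_id: str
    channel: str       # e.g. "email" or "phone" (illustrative labels)
    purpose: str       # e.g. "own_products" or "partner_a"
    granted: bool
    recorded_at: datetime


class ConsentLedger:
    """Append-only history; the latest event per (subject, channel, purpose) wins."""

    def __init__(self) -> None:
        self._events: List[ConsentEvent] = []

    def record(self, event: ConsentEvent) -> None:
        self._events.append(event)

    def is_permitted(self, subject_id: str, channel: str, purpose: str) -> bool:
        key = (subject_id, channel, purpose)
        matches = [e for e in self._events
                   if (e.subject_id, e.channel, e.purpose) == key]
        if not matches:
            return False  # assumption: no recorded consent means no marketing
        return max(matches, key=lambda e: e.recorded_at).granted

    def history(self, subject_id: str) -> List[ConsentEvent]:
        """Full audit trail for one data subject, oldest first."""
        return sorted((e for e in self._events if e.subject_id == subject_id),
                      key=lambda e: e.recorded_at)


def utc(year: int, month: int, day: int) -> datetime:
    return datetime(year, month, day, tzinfo=timezone.utc)


ledger = ConsentLedger()
ledger.record(ConsentEvent("cust-1", "email", "own_products", True, utc(2017, 1, 10)))
ledger.record(ConsentEvent("cust-1", "email", "own_products", False, utc(2017, 9, 1)))
ledger.record(ConsentEvent("cust-1", "phone", "own_products", True, utc(2017, 9, 1)))

email_ok = ledger.is_permitted("cust-1", "email", "own_products")
phone_ok = ledger.is_permitted("cust-1", "phone", "own_products")
```

Because nothing is ever overwritten, the same structure answers both questions that matter: “can we contact this person this way today?” and “can we prove what they agreed to, and when?”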

That can add up – bearing in mind it is platform agnostic, no code changes are required, and initial implementation often only takes days – to a game-changer.

Other solutions may attempt the same using transaction logs, or specific plug-ins for proprietary platforms, but they don’t do it how SecuPi does it. That can impact performance, limit how much of your data estate it’s possible to cover, and most don’t understand that granular application layer data context. Things to watch out for in vendor pitches.

If you don’t have the ability to adequately minimise access to databases and minimise the personal data available to authenticated users, you are not meeting GDPR requirements and you are not managing the vast proportion of your residual data disclosure, damage, misuse, and/or data theft risk. Of course, if you work in a small firm, the risk likely doesn’t warrant this kind of solution, but when you deal with millions of records and thousands of users, the risk and difficulty maintaining control escalates.

Then there’s the other value that SecuPi adds with the User Behaviour Analytics and forensic logging of granular interactions between user and data. Nothing else I’ve seen can give you so much detail on so much data processing activity.

If John in the customer service team usually accesses an average of 50 customer records per day to service customer calls, and John suddenly starts accessing hundreds or even thousands of personal data records there may be a problem. Alternatively, John’s access profile might not vary on the surface, but instead of requesting a report on a variety of customers, he starts focusing solely on high net worth customers…another potential red flag. A flag that SecuPi (unlike solutions that focus on transactions metadata) could be configured to recognise.
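The two red flags in John’s story can be expressed as very simple checks. The Python sketch below is a toy, assuming a basic standard-deviation test for volume and a share-of-records test for focus; it says nothing about how SecuPi’s analytics actually work, and the function names, thresholds, and sample numbers are all made up for illustration.

```python
from statistics import mean, pstdev
from typing import List


def is_volume_anomaly(history: List[int], today: int, threshold: float = 3.0) -> bool:
    """Flag `today` if it sits more than `threshold` standard deviations above the mean."""
    baseline = mean(history)
    spread = pstdev(history) or 1.0  # fall back to 1.0 when the history is perfectly flat
    return (today - baseline) / spread > threshold


def is_focus_anomaly(segments_today: List[str], watched: str, max_share: float = 0.8) -> bool:
    """Flag a day where one watched customer segment dominates the records accessed."""
    if not segments_today:
        return False
    return segments_today.count(watched) / len(segments_today) > max_share


# Made-up baseline: John normally touches about 50 customer records a day.
johns_daily_counts = [48, 52, 50, 47, 53, 50, 49, 51]

normal_day = is_volume_anomaly(johns_daily_counts, 51)
bulk_day = is_volume_anomaly(johns_daily_counts, 400)

# Same volume as usual, but almost every record is a high net worth customer.
todays_segments = ["high_net_worth"] * 45 + ["standard"] * 5
focus_flag = is_focus_anomaly(todays_segments, "high_net_worth")
```

Note that the second check only works if you can see what kind of records were accessed, not just how many. That is the difference between monitoring transaction metadata and monitoring the data itself.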

After all, insider threats come in all kinds of shapes and guises.

If SecuPi logs don’t show any naughtiness going on, it still provides rich information about who accesses data, the normal patterns of data access, data contained in any data store it can see, and how data is moving between systems. If you have spent a small fortune mapping data flows and populating a data inventory, this is your way to ensure that’s accurate and keep that up to date.

In addition, and this is me speaking as a security risk manager who despaired, it can add a data perspective into all of the other monitoring and alerting done by your SOC or security team. I can’t tell you how many times folk have come to me with a new vulnerability, told me it’s game-changingly bad, but can’t give me any context for the data and high availability systems potentially at risk bearing in mind layered existing controls. A.k.a I’m being asked to set hugely expensive rabbits running with no way to articulate the scale of the risk.

The number of people with access to data has been shown to have a reliable statistical correlation to the frequency of data breaches according to data from Vivo Security (see the graph above). That feels like an obvious thing to say, but with statistics you can quantify that information for a risk conversation. Something folk in the data protection and security space constantly struggle to do.

SecuPi logs give you invaluable real-time and historical insight into daily scale of data use and exactly what’s accessed. The kind of insight that helps to refine pre-existing IdM, vulnerability management, and threat assessment controls, along with related risk appetites and policies.

Couple that with SecuPi rules engine logs, and you have a granular, real-time, and historical record of the control that has been applied. A godsend when audit comes calling and if you ever need to explain yourself to a regulator.

Last, but not least, control implemented by SecuPi (except physical deletion of records in databases) can be quickly changed via the SecuPi dashboard. That’s frequently not the case (and the cause of much nervousness about pushing the ‘GO’ button for change) when you are making best efforts to directly change systems, or building analytics capability to make things work.

Just a few reasons why I’m happily helping to keep SecuPi honest about GDPR control claims, while still working as an independent consultant to help clients with the people, policy, and process parts of this pervasive regulation.

So, I hope you forgive me ceding some of my hard won independence for this. Bearing in mind the number of piecemeal and downright poor offerings out there, offering a leg up to something that helps this much felt like the right thing to do.

By all means drop me a line if you’d like to know more (sarah@secupi.com for my new vendor alter ego), or do get in touch for a chat about all the other parts we also can’t ignore.